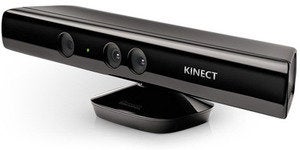

Ashutosh Saxena bought an Xbox to play computer games at home, but discovered that its Kinect motion-detection technology is a rich tool for his robotics lab, where he is trying to create robots that learn what humans are up to and try to help out.

Saxena, a professor of computer science at Cornell University, says the Xbox One announced last week will expand the range of activities his robots can figure out, because its HD camera will be able to detect more subtle human motions, such as hand gestures.

“Modeling hands is extremely hard,” he says. “Hands move in thousands of ways.”

With the current Kinect, his models treat each hand as a single data point, so they can’t analyze finger motions, for example. The camera’s resolution is just about good enough to identify a coffee mug but not cell phones or fingers, he says.

Kinect’s 3D imaging is far superior to, and less expensive than, the 2D technology he used before. The 2D technology was good for categorizing a scene or detecting an object, but not for analyzing motion, he says.

With input from Kinect sensors, his algorithms can determine which of a range of activities a person is performing and then carry out appropriate predetermined tasks. For example, the motion sensors, in combination with algorithms running on Saxena’s Ubuntu Linux server, could identify that a person is preparing breakfast cereal, and the robot could then retrieve milk from the refrigerator. Or it could anticipate that the person will need a spoon and either get one or ask whether the person wants one.

“It seems trivial for an able-bodied person,” Saxena says, “but for people with medical conditions it’s actually a big problem.”

Similarly, it could anticipate when a person’s mug is empty and refill it, as shown in this video:

Learning robots

Robots in his lab can identify about 120 activities, such as eating, drinking, moving things around, cleaning objects, stacking objects, taking medicine, and other regular daily activities, he says.

Attached to telepresence systems, learning robots could carry cameras around remote locations. A participant could control where the camera goes, but the robot itself would keep it from bumping into objects and people. It could also anticipate where the interesting action in a scene is going and follow it, he says.

Attached to a room-vacuuming robot, a sensor could figure out what is going on in a room, such as someone watching television, and have the robot delay cleaning that room or move on to another one.

Assembly-line robots could be made to work more closely with humans. Today such robots generally perform repetitive tasks and are kept separate from people. Learning robots could sense what the people are doing and help, or at least stay out of the way.

Assistant robots could help at nursing facilities, determining whether patients have taken their medications and dispensing them.

He says some of these applications could be ready for commercial use within five years.

The difficult part will be writing software that can analyze human activity, identify the specific tasks people are performing, anticipate what they are likely to do next and figure out what the robots can do that’s useful, Saxena says.
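
To make that sequence of steps concrete, here is a minimal Python sketch of the last part: choosing a response once an activity has been recognized. It is not Saxena's software. The activity names, the lookup tables, the confidence threshold and the `choose_plan` function are all hypothetical, and the sketch assumes some upstream recognizer has already turned sensor data into a score per activity, which is the genuinely hard part.

```python
from dataclasses import dataclass
from typing import Dict, Optional

# Hypothetical lookup tables; activity and task names are illustrative only.
RESPONSES = {
    "preparing_cereal": "fetch_milk",
    "holding_empty_mug": "refill_mug",
}
ANTICIPATED_NEEDS = {
    "preparing_cereal": "spoon",
}


@dataclass
class Plan:
    action: Optional[str]  # predetermined task to perform now, if any
    offer: Optional[str]   # item the person will probably need next, if any


def choose_plan(scores: Dict[str, float], threshold: float = 0.6) -> Plan:
    """Pick the most likely activity and look up the robot's response.

    `scores` maps activity names to confidence values from an assumed
    upstream recognizer. Below `threshold` the robot does nothing.
    """
    if not scores:
        return Plan(None, None)
    activity, confidence = max(scores.items(), key=lambda kv: kv[1])
    if confidence < threshold:
        return Plan(None, None)
    return Plan(RESPONSES.get(activity), ANTICIPATED_NEEDS.get(activity))
```

With scores such as `{"preparing_cereal": 0.8, "watching_tv": 0.1}`, the sketch would fetch the milk and offer a spoon; with no activity above the threshold, it would stay out of the way.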
